How can we best regulate artificial intelligence (AI)?

The call for regulation of artificial intelligence (AI) comes from several concerns:

- Ethical Concerns: AI applications can impact society in profound ways. For instance, facial recognition technology raises concerns about privacy and surveillance. Algorithms used in hiring, lending, or criminal justice can potentially discriminate against certain groups if they are trained on biased data.

- Safety and Reliability: As AI becomes more advanced, there's a growing need to ensure these systems perform reliably and safely. Autonomous vehicles, for example, have to make complex decisions in real-time with human lives at stake. Poorly designed or malfunctioning AI can pose significant risks.

- Accountability and Transparency: AI systems, particularly those using machine learning, can be opaque, leading to what is often referred to as the "black box" problem. It can be difficult to understand how these systems make decisions, which makes it hard to diagnose failures and assign responsibility when things go wrong. Who is held accountable when an AI makes a mistake?

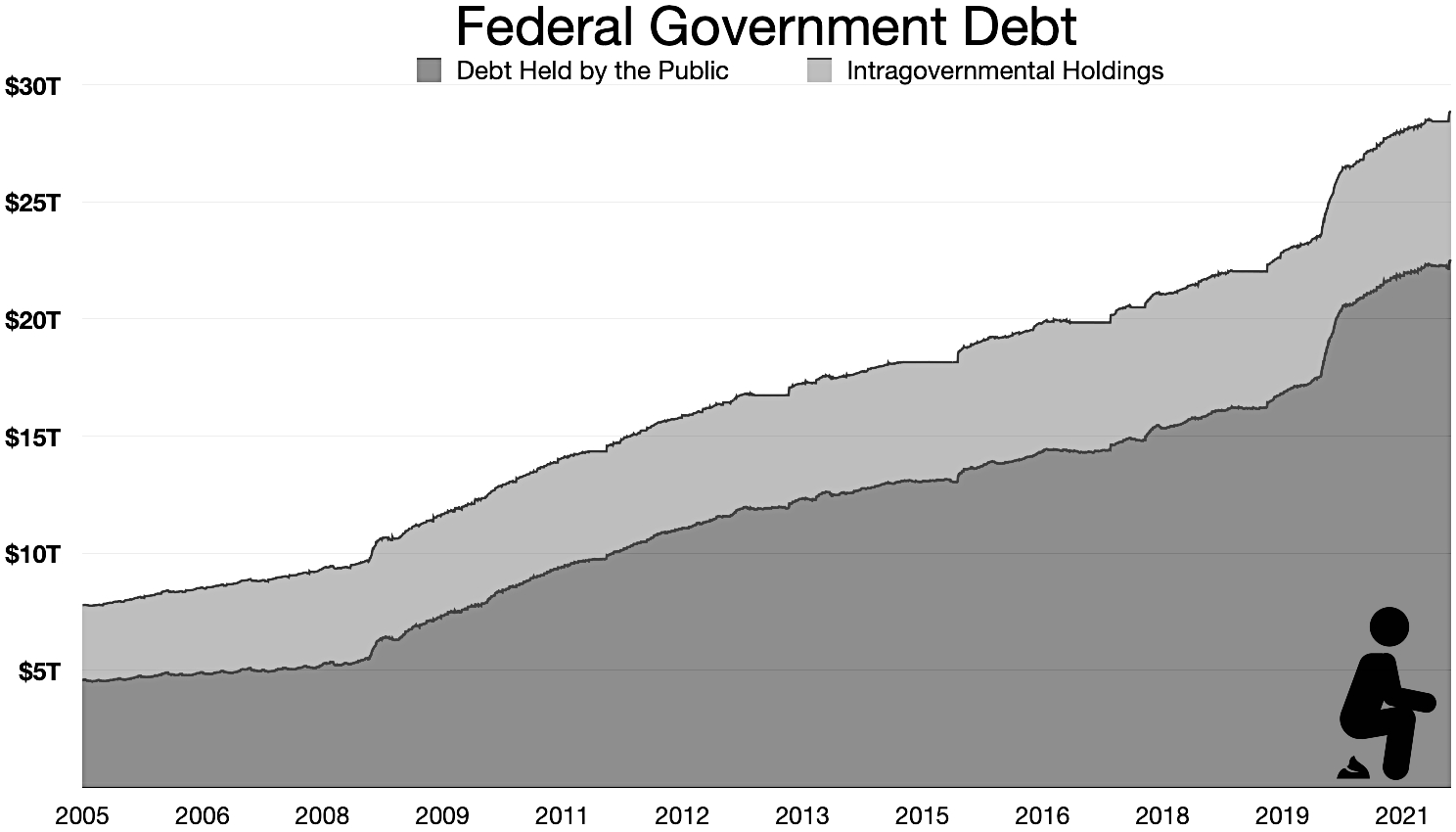

- Economic Impact: AI can significantly disrupt labor markets by automating certain jobs, leading to job displacement. Regulations might be needed to manage these transitions and ensure that the benefits of AI are distributed equitably.

- Security Concerns: AI technologies can be used maliciously, for example in deepfakes for misinformation campaigns, autonomous weapons, or AI-enabled cyberattacks. Regulations can help mitigate these threats.

- Long-term Existential Risks: Some researchers worry about the possibility of developing superintelligent AI that could pose a risk to humanity if not properly controlled, although this is a controversial and speculative scenario.

Regulating AI is a complex and multifaceted task. It requires input from many stakeholders, including governments, industries, academia, civil society, and the general public. Here are some key considerations:

- Fostering Collaboration: Develop international standards and best practices for AI through collaboration among governments, tech companies, academic institutions, and non-governmental organizations. This can help ensure that regulations are comprehensive, effective, and fair.

- Defining Ethics and Transparency: AI systems should be designed and used in ways that respect human rights, including privacy and non-discrimination. Transparency in AI refers to the clarity and understandability of AI systems and their decision-making processes. This is critical for public trust and accountability. Creating ethical guidelines can help ensure that AI systems are developed and used responsibly.

- Risk Assessment: Assess the potential risks and impacts of AI technologies. This should include considerations of how they may be misused or how they could go wrong, as well as their potential social, economic, and environmental impacts.

- Robust and Representative Data: Regulate the quality and representativeness of the data used to train AI systems. Unrepresentative or biased data can lead to unfair or discriminatory outcomes. Additionally, data privacy should be a core consideration.

- Setting Boundaries: Define the scope and limitations of AI usage in sensitive areas like healthcare, education, finance, and the military. Guidelines and regulations can help manage the potential risks and ethical issues.

- Accountability and Liability: Establish clear rules about who is responsible when an AI system causes harm or makes a mistake. This is a complex issue, particularly when AI systems learn and evolve over time.

- Public Participation: Engage the public in decision-making processes about AI regulation. This can help ensure that regulations reflect the values and needs of society, and that they are understood and accepted by the public.

- Continuous Learning and Adaptation: Given the rapid pace of AI development, regulatory frameworks need to be able to evolve over time. This could involve establishing mechanisms for ongoing monitoring, evaluation, and revision of regulations.

- Education and Training: Investing in education and training can help society understand AI technologies and their implications. This includes training for regulatory and policy professionals, so that they can make informed decisions about AI regulation.

- Creating Legal Frameworks: Legal frameworks should be designed to protect individual and societal rights without hindering innovation and progress. Regulations should be technology-neutral and principle-based so that they can accommodate future developments.
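
As one illustration of the data-quality and non-discrimination points above, the sketch below compares how often an automated system produces a favorable outcome for different groups. It is a minimal, hypothetical example: the function names `selection_rates` and `disparate_impact_ratio`, the toy records, and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb used in US employment contexts) are all assumptions made for illustration, not a test that any regulation discussed here prescribes.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable decisions for each group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the system produced a favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so there is no gap
    return min(rates.values()) / highest


# Toy, made-up decisions: (group label, favorable outcome?)
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed audit threshold, not a legal test
print(rates, round(ratio, 2), flagged)
```

A check like this only surfaces a gap in outcomes. Deciding whether the gap is unjustified, and who is responsible for it, remains a question for the accountability and legal frameworks described above.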

These are general suggestions, and the specifics will vary depending on the context and the specific AI technologies in question. The important point is that regulation of AI should be a cooperative, informed, and ongoing process.

Should AI development stop until regulations are put into place?

While it's understandable to have concerns about the unregulated development of AI, completely halting AI development until regulations are in place may not be feasible or desirable for several reasons:

- Innovation and Progress: AI has the potential to bring enormous benefits to society, including in fields like healthcare, education, and climate change mitigation. Stopping AI development could delay these benefits.

- Practical Challenges: AI development is a global and decentralized process, involving countless individuals, companies, and countries. Coordinating a global halt to AI development would be extraordinarily difficult, if not impossible.

- Regulatory Development: In order to develop effective regulations, lawmakers need to understand the technologies they're regulating. This often involves observing how they develop and are used in practice. Stopping AI development could limit the opportunities for this learning process.

- Global Competitiveness: Countries or regions that stop AI development could fall behind in the global AI race, with potentially significant economic and strategic consequences.

Instead, a more balanced approach could be to continue AI development while also working urgently to put regulations in place. This could involve, for example:

- Guidelines and Self-Regulation: In the absence of formal regulations, developers can follow existing ethical guidelines and best practices for AI. Companies and research institutions can also implement their own internal review and oversight mechanisms.

- Collaboration and Dialogue: Developers, policymakers, civil society, and the public can engage in ongoing dialogue about AI and its implications. This can help to identify and address concerns early, and to shape the development of regulations.

- Risk Assessment and Mitigation: Developers can take steps to assess and mitigate the risks of their AI systems, even before regulations are in place.

- Transparency and Accountability: Developers can be transparent about their methods, objectives, and results, and can take responsibility for the impacts of their AI systems.

- Education and Training: Investment in education and training can help society to understand AI and to make informed decisions about its regulation.

This approach allows for the continued development and beneficial use of AI, while also working to ensure that it is developed and used responsibly.